There are a few ongoing debates in the world of digital design: “Should designers code?”, “What’s the value of design?”, “UX versus UI,” and, perhaps most fundamentally, “Is everyone a designer?” To get a taste of that last one, you can step into this Twitter thread from a little while back (TL;DR: it didn’t go super well for anyone):

I legitimately don’t understand why so many people are peeved at the idea that everyone is a designer.

— Peter Merholz (@peterme) June 22, 2019

To be clear at the outset, I don’t care if everyone is a designer. However, I’ve been considering this debate for a while and I think there is something interesting here that’s worth further inspection: Why is design a lightning rod for this kind of debate? This doesn’t happen with other disciplines (at least not to the extent it does with design). Few people are walking around asserting that everyone is an engineer, or a marketer, or an accountant, or a product manager. I think the reason sits deep within our societal value system.

Design, as a term, is amorphous. Technically you can design anything from an argument to an economic system and everything in between, and you can do it with any process you see fit. We apply the idea of design to so many things that, professionally, it’s basically a meaningless term without the addition of some modifier: experience design, industrial design, interior design, architectural design, graphic design, fashion design, systems design, and so on. Each is its own discipline with its own practices, terms, processes, and outputs. However, even with its myriad applications and definitions, the term “design” does carry two foundational cultural associations: agency and creativity. The combination of these associations makes it ripe for debates over ownership.

Agency

To possess agency means to have the ability to affect outcomes. Without agency we’re just carried by the currents, waiting to see where we end up. Agency is control, and deep down we all want to feel like we have control. Over time our cultural conversation has romanticized design, unlike any other discipline, as the epicenter of agency, a crossroads where creativity and planning translate into action.

At its core, design is the act of applying structure to a set of materials or elements in order to achieve a specific outcome. This is a fundamental human need. It’s not in our nature to leave things unstructured. Even the concept of “unstructured play” simply means providing space for a child to design (structure) their own play experience — the unstructured part of it is just us (adults) telling ourselves to let go of our own desire to design and let the kids have a turn. We hand agency to the child so they can practice wielding it.

There are few, if any, activities that carry the same deep tie to the concept of agency that design does. This is partially why no one cares to assert things like “we’re all marketers” or “we’re all engineers.” Those roles don’t carry the same sense of agency. Sure, engineers have the ability to make something tangible, but someone had to design that thing first. You can “design” the code that goes into what you are building, but you do not have the agency to determine what is being built (unless you are also designing it).

If we really break it down, nearly every job in existence involves designing, completing a set of tasks in service to something that was designed, performing tasks that are made possible by some aspect of design, or some mix of the three. Either way, the act of “designing” is what dictates the outcomes.

Creativity

The other key aspect of our cultural definition of design is creativity. Being creative is a deep value of modern society. We lionize the creatives, in the arts as well as in business. And creativity has become synonymous with innovation. There is a reason that for most people, Steve Wozniak is a bit player in the story of Steve Jobs.

The idea of what it means for an individual to be creative is something that has shifted over time. In her TED Talk, Elizabeth Gilbert discusses the changing association of creative “genius.” The historical concept, from ancient Greece and Rome, was that a person could have a genius, meaning that they were a conduit for some external creative force. The creative output was not their own; they were merely a vessel selected to make a creative work tangible. Today, we talk about people being a genius, meaning they are no longer a conduit for a creative force, but instead they are the creative force and the output of their creativity is theirs.

This seemingly minor semantic shift is actually seismic in that it makes creativity something that can be possessed and, as such, coveted. We now aspire to creativity in the same way we aspire to wealth. We teach it and nurture it (to varying degrees) in schools. And in professional settings, having the ability to be “creative” in your daily work is often viewed as a light against the darkness of mundane drudgery. As we see it today, everyone possesses some level of creativity, and fulfillment is found in expressing it. When we can’t get that satisfaction from our jobs we find hobbies and other activities to fulfill our creative needs.

So, our cultural concept of design makes tangible two highly desirable aspects of human existence: agency and creativity. Combine this with the amorphous nature of the term “design” and suddenly “designer” becomes a box that anyone can step into and many people desire to step into. This sets up an ongoing battle over the ownership of design. We just can’t help ourselves.

—

Take again, as a proxy, our approach to the arts. While we lionize musicians, actors, artists, and other creators, we simultaneously feel compelled to take ownership of their work, critiquing it, questioning their creative decisions, and making demands based on our own desires. The constant list of demands and grievances from Star Wars fans is a perfect example. Or the fans who get upset if a band doesn’t play their favorite hit song at a show. Even deeper, we feel a universal right to remix things, cover things, and steal things.

But just like other things we covet, what we desire is ownership over the output, not the process of creating it. For example, we’re willing to illegally download music, movies, books, games, software, fonts, and images en masse, dismissing the work it took to create them and sidestepping the requirement to compensate the creators.

A similar phenomenon occurs in the world of design. Few people want to own the nuts-and-bolts process of designing, but everyone wants to have their say on the final output. And because design represents the manifestation of agency and creativity, there is an expectation that all of that feedback will be heard and incorporated. Pushing back on someone’s design feedback is not just questioning their opinion; it’s a direct assault on their sense of agency.

As a result, final designs are often a Frankenstein of feedback and opinions from everyone involved in the design process. In contrast, it’s rare to see an engineer get feedback on the way code should be written from a person who doesn’t have “engineer” in their title. It’s rarer still to see an engineer feel compelled to actually take that sort of feedback and incorporate it.

Another place this kind of behavior crops up is in the medical world. Lots of people love to give out health advice or question the decisions of doctors. However, few people would say “everyone is a physician.”

And I think this represents a critical point. There are two reasons that people do not assert that they are a physician unless they are actually a physician:

- We have made a cultural decision that practicing medicine is too risky to allow just anyone to do it. You can go to jail for practicing medicine without a license.

- No one actually wants to be responsible for the potential life and death consequences of the medical advice they give.

This highlights a third aspect of our cultural definition of design: Design is frivolous. Despite the connection between design and agency, many still view “designing” as trite and superficial.

Humans are sensory creatures. We absorb much of the world around us through visual, auditory, tactile, and olfactory inputs. Because of this, when we think of the agency inherent in design, most of us think about it in terms of the aesthetic value of the output. Basically, we continually conflate design with art. If you don’t believe me, watch any episode of Abstract on Netflix. This is also why so many design programs are still housed in art schools.

So when most people critique designs, their focus is on aesthetics (colors, fonts, shapes), and their reactions are based on the feelings and emotions those aesthetic values elicit. While aesthetics have an important role to play, they are only one piece of the overall puzzle. It is much harder for people to substantively critique the functional merits of a design or understand the potential impacts a design decision can have. That is partially why so many of our design decisions end up excluding certain groups of users or creating other unexpected negative consequences: we don’t critique our decisions through that lens.

Because of this narrow, aesthetic-based view, the outcomes of the design process feel relatively inconsequential to many people, especially in comparison to something like the outcomes of a medical diagnosis. And if there are no consequences, why shouldn’t we all participate? Everyone is a designer because there is no perceived ramification for practicing design.

Of course, in reality, there are major consequences for the design decisions we make, consequences that, on a population level, are more significant than many of the medical decisions a doctor makes.

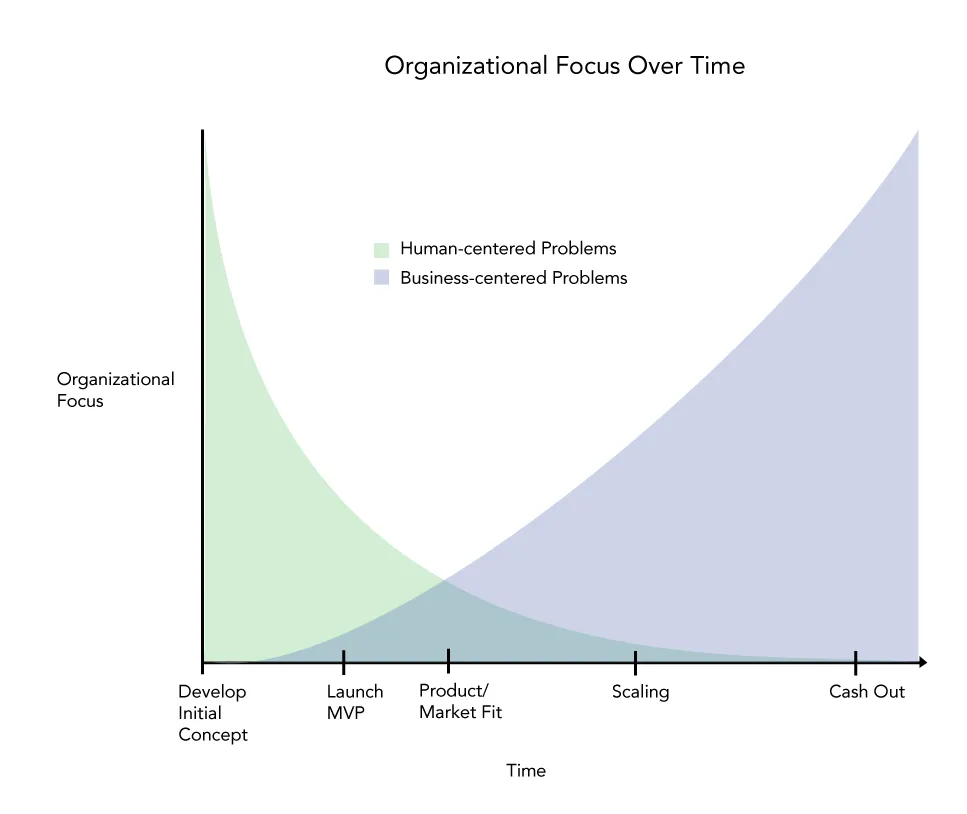

What I’ve come to realize is that the idea that everyone is a designer is not really about some territorial fight for ownership; it’s actually a symptom of our broken culture of technology. Innovation (creativity) is our cultural gold standard. We push for it at all costs and we can’t be bothered by the repercussions. Design is the tool that gives form to that relentless drive. In a world of blitzscaling and “move fast and break things” it serves us to believe that our decisions have no consequences. If we truly acknowledged that our choices have real repercussions on people’s lives, then we would have to dismantle our entire approach to product development.

Today, “everyone is a designer” is used to maintain the status quo by perpetuating the illusion that we can operate with impunity, in a consequence-free fantasy land. It’s a statement that our decisions have no weight, so anyone can make them.

I said at the beginning that I don’t care if everyone is a designer, and I mean that. If we keep thinking of this debate as some territorial pissing match, then we continue to abdicate our real responsibility, which is to be accountable for the things we create.

It really doesn’t matter who is designing. The only thing that matters is that we change our cultural conversation around the consequences of design. If we get real about the weight and impact that design decisions have on our world, and we all still want to take on the risk and responsibility that comes with that agency, then more power to all of us.

—

“Why the ‘Everyone Is a Designer’ Debate Is Beside the Point” was originally published on Medium on January 22, 2020.